In this blog post, we take a closer look at how the OBS Background Removal Plugin was built: its key components, what each one does, and the process behind it. The plugin was created to bring virtual green screen and background removal capabilities to OBS (Open Broadcaster Software), a popular live streaming and recording application. With over 500,000 downloads and ongoing contributions from various developers, it has gained significant traction in the streaming community. Whether you want to understand how this plugin works or are considering building a similar one yourself, this walkthrough should give you a useful starting point.

Please refer to the GitHub repo for the full code: https://github.com/royshil/obs-backgroundremoval. I cannot include all of it here, only the “interesting parts”.

Plugin Architecture

The OBS Background Removal Plugin is written in C++ and follows the plugin template provided by the OBS project. The main entry point for the plugin is the “plugin-main.cpp” file, which registers the plugin’s functions with OBS. The template provided by OBS serves as a foundation for building plugins, offering essential workflows for building and publishing the plugin across multiple operating systems.

The plugin-main.cpp file only supplies the module's description and registers the filter with OBS, for example:

    MODULE_EXPORT const char *obs_module_description(void)
    {
        return obs_module_text("PortraitBackgroundFilterPlugin");
    }

    extern struct obs_source_info background_removal_filter_info;

    bool obs_module_load(void)
    {
        obs_register_source(&background_removal_filter_info);
        blog(LOG_INFO, "plugin loaded successfully (version %s)", PLUGIN_VERSION);
        return true;
    }

This is the struct that registers the functions of the plugin with OBS:

    struct obs_source_info background_removal_filter_info = {
        .id = "background_removal",
        .type = OBS_SOURCE_TYPE_FILTER,
        .output_flags = OBS_SOURCE_VIDEO,
        .get_name = filter_getname,
        .create = filter_create,
        .destroy = filter_destroy,
        .get_defaults = filter_defaults,
        .get_properties = filter_properties,
        .update = filter_update,
        .activate = filter_activate,
        .deactivate = filter_deactivate,
        .video_tick = filter_video_tick,
        .video_render = filter_video_render,
    };

Video Rendering and Effects

The core functionality of the plugin resides in the “background-filter.cpp” file. Within this file, the video render function (registered above as filter_video_render) draws the output frame on the GPU for efficiency. The plugin leverages OBS’s effects system: rendering blends the input RGB image with the background mask using a custom effect written in HLSL (High-Level Shading Language). This blending removes the background while preserving the foreground.

This is part of the code for the rendering:

    gs_eparam_t *alphamask = gs_effect_get_param_by_name(tf->effect, "alphamask");
    gs_eparam_t *blurSize = gs_effect_get_param_by_name(tf->effect, "blurSize");
    gs_eparam_t *xTexelSize = gs_effect_get_param_by_name(tf->effect, "xTexelSize");
    gs_eparam_t *yTexelSize = gs_effect_get_param_by_name(tf->effect, "yTexelSize");
    gs_eparam_t *blurredBackground = gs_effect_get_param_by_name(tf->effect, "blurredBackground");

    gs_effect_set_texture(alphamask, alphaTexture);
    gs_effect_set_int(blurSize, (int)tf->blurBackground);
    gs_effect_set_float(xTexelSize, 1.0f / width);
    gs_effect_set_float(yTexelSize, 1.0f / height);
    if (tf->blurBackground > 0.0) {
        gs_effect_set_texture(blurredBackground, blurredTexture);
    }

    obs_source_process_filter_tech_end(tf->source, tf->effect, 0, 0, "DrawWithBlur");

    gs_blend_state_pop();

    gs_texture_destroy(alphaTexture);
    gs_texture_destroy(blurredTexture);

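The HLSL effect is simple. It divides the RGB values by the input alpha (which appears to undo premultiplied alpha), then multiplies both color and alpha by one minus the background mask value, so pixels the mask marks as background become transparent: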

    float4 PSAlphaMaskRGBAWithoutBlur(VertDataOut v_in) : TARGET
    {
        float4 inputRGBA = image.Sample(textureSampler, v_in.uv);
        inputRGBA.rgb = max(float3(0.0, 0.0, 0.0), inputRGBA.rgb / inputRGBA.a);

        float4 outputRGBA;
        float a = (1.0 - alphamask.Sample(textureSampler, v_in.uv).r) * inputRGBA.a;
        outputRGBA.rgb = inputRGBA.rgb * a;
        outputRGBA.a = a;
        return outputRGBA;
    }
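To make the per-pixel math easier to follow, here is a minimal CPU sketch of the same computation in C++. The `Pixel` struct and the `applyAlphaMask` name are hypothetical and not part of the plugin; the guard against a zero alpha is also my own addition, since a CPU division by zero would otherwise produce NaN or infinity.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical RGBA pixel with channels in [0, 1]; not a plugin type.
struct Pixel {
    float r, g, b, a;
};

// CPU mirror of the shader math for one pixel.
// `in` is the sampled input color, `mask` is the background mask sample
// in [0, 1], where 1 means "background".
Pixel applyAlphaMask(Pixel in, float mask)
{
    assert(mask >= 0.0f && mask <= 1.0f);

    // Divide color by alpha, clamped at zero (skipped when alpha is zero).
    float r = 0.0f, g = 0.0f, b = 0.0f;
    if (in.a > 0.0f) {
        r = std::max(0.0f, in.r / in.a);
        g = std::max(0.0f, in.g / in.a);
        b = std::max(0.0f, in.b / in.a);
    }

    // Foreground weight: inverse of the mask, scaled by the input alpha.
    const float a = (1.0f - mask) * in.a;

    // Multiply color by the new alpha and return it alongside that alpha.
    return Pixel{r * a, g * a, b * a, a};
}
```

With a mask value of 0 the pixel passes through unchanged, and with a mask value of 1 it becomes fully transparent; values in between fade the pixel out, which is what gives feathered edges their soft look.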

Neural Network Inference

To perform background removal, the plugin employs a neural network for image processing. The “video tick” function retrieves the RGB(A) input color image, which is then processed through the neural network in the “run filter model inference” function. This process involves converting the image to RGB, resizing it to the network’s input size, applying any necessary preprocessing, running the inference, and converting the output to the expected format.
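The first three steps of that pipeline can be sketched without any dependencies. The plugin itself works with OpenCV's cv::Mat, so the `Image8` type and the `rgbaToRgb`, `resizeNearest`, and `normalize` helpers below are hypothetical stand-ins, and nearest-neighbor resizing is chosen only to keep the sketch short.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical image type: interleaved 8-bit channels, row-major.
struct Image8 {
    uint32_t width, height, channels;
    std::vector<uint8_t> data;
};

// Step 1: drop the alpha channel (RGBA -> RGB).
Image8 rgbaToRgb(const Image8 &src)
{
    assert(src.channels == 4);
    const std::size_t pixels = static_cast<std::size_t>(src.width) * src.height;
    Image8 dst{src.width, src.height, 3, {}};
    dst.data.resize(pixels * 3);
    for (std::size_t i = 0; i < pixels; ++i)
        for (std::size_t c = 0; c < 3; ++c)
            dst.data[i * 3 + c] = src.data[i * 4 + c];
    return dst;
}

// Step 2: resize to the network's input size (nearest neighbor).
Image8 resizeNearest(const Image8 &src, uint32_t outW, uint32_t outH)
{
    assert(src.width > 0 && src.height > 0 && outW > 0 && outH > 0);
    Image8 dst{outW, outH, src.channels, {}};
    dst.data.resize(static_cast<std::size_t>(outW) * outH * src.channels);
    for (uint32_t y = 0; y < outH; ++y) {
        const std::size_t sy = static_cast<std::size_t>(y) * src.height / outH;
        for (uint32_t x = 0; x < outW; ++x) {
            const std::size_t sx = static_cast<std::size_t>(x) * src.width / outW;
            for (uint32_t c = 0; c < src.channels; ++c)
                dst.data[(static_cast<std::size_t>(y) * outW + x) * src.channels + c] =
                    src.data[(sy * src.width + sx) * src.channels + c];
        }
    }
    return dst;
}

// Step 3: preprocess by scaling 0-255 values to [0, 1] floats.
std::vector<float> normalize(const Image8 &src)
{
    std::vector<float> out(src.data.size());
    for (std::size_t i = 0; i < src.data.size(); ++i)
        out[i] = src.data[i] / 255.0f;
    return out;
}
```

The resulting float buffer is what would be copied into the network's input tensor. Scaling by 1/255 matches the default preprocessing in the base Model class shown later; individual models may need something different.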

Abstracted Model Class

The plugin incorporates various neural network models for different background removal scenarios. These models are abstracted behind a parent class called “Model”, which handles shared functionality such as file path retrieval, input/output names, and buffer allocation. Each model subclass implements its own preprocessing and postprocessing logic, catering to the requirements of its neural network architecture.

The Model.h file holds the abstract Model class:

    class Model {
    private:
        /* data */
    public:
        Model(/* args */){};
        virtual ~Model(){};

        const char *name;

    #if _WIN32
        const std::wstring
    #else
        const std::string
    #endif
        getModelFilepath(const std::string &modelSelection)
        {
            //...
        }

        virtual void populateInputOutputNames(const std::unique_ptr<Ort::Session> &session,
                                              std::vector<Ort::AllocatedStringPtr> &inputNames,
                                              std::vector<Ort::AllocatedStringPtr> &outputNames)
        {
            //...
        }

        virtual bool populateInputOutputShapes(const std::unique_ptr<Ort::Session> &session,
                                               std::vector<std::vector<int64_t>> &inputDims,
                                               std::vector<std::vector<int64_t>> &outputDims)
        {
            //...
        }

        virtual void allocateTensorBuffers(const std::vector<std::vector<int64_t>> &inputDims,
                                           const std::vector<std::vector<int64_t>> &outputDims,
                                           std::vector<std::vector<float>> &outputTensorValues,
                                           std::vector<std::vector<float>> &inputTensorValues,
                                           std::vector<Ort::Value> &inputTensor,
                                           std::vector<Ort::Value> &outputTensor)
        {
            //...
        }

        virtual void getNetworkInputSize(const std::vector<std::vector<int64_t>> &inputDims,
                                         uint32_t &inputWidth, uint32_t &inputHeight)
        {
            // BHWC
            inputWidth = (int)inputDims[0][2];
            inputHeight = (int)inputDims[0][1];
        }

        virtual void prepareInputToNetwork(cv::Mat &resizedImage, cv::Mat &preprocessedImage)
        {
            preprocessedImage = resizedImage / 255.0;
        }

        virtual void postprocessOutput(cv::Mat &output)
        {
            output = output * 255.0; // Convert to 0-255 range
        }

        virtual void loadInputToTensor(const cv::Mat &preprocessedImage, uint32_t inputWidth,
                                       uint32_t inputHeight,
                                       std::vector<std::vector<float>> &inputTensorValues)
        {
            //...
        }

        virtual cv::Mat getNetworkOutput(const std::vector<std::vector<int64_t>> &outputDims,
                                         std::vector<std::vector<float>> &outputTensorValues)
        {
            //...
        }

        virtual void assignOutputToInput(std::vector<std::vector<float>> &,
                                         std::vector<std::vector<float>> &)
        {
        }

        virtual void runNetworkInference(const std::unique_ptr<Ort::Session> &session,
                                         const std::vector<Ort::AllocatedStringPtr> &inputNames,
                                         const std::vector<Ort::AllocatedStringPtr> &outputNames,
                                         const std::vector<Ort::Value> &inputTensor,
                                         std::vector<Ort::Value> &outputTensor)
        {
            //...
        }
    };

This class assumes BHWC data format, but some models are BCHW. To handle that we have the ModelBCHW class which overrides some of the functions that have to do with loading and unloading the tensors from the inference session.
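The difference between the two layouts comes down to index arithmetic. The sketch below reorders a single image (batch size 1) from interleaved HWC to planar CHW and back using plain vectors. The `hwcToChw` and `chwToHwc` names are hypothetical; the real ModelBCHW class may perform this conversion differently, for example through OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Interleaved HWC -> planar CHW for one image.
// HWC index: (h * width + w) * channels + c
// CHW index: (c * height + h) * width + w
std::vector<float> hwcToChw(const std::vector<float> &hwc, std::size_t height,
                            std::size_t width, std::size_t channels)
{
    assert(hwc.size() == height * width * channels);
    std::vector<float> chw(hwc.size());
    for (std::size_t h = 0; h < height; ++h)
        for (std::size_t w = 0; w < width; ++w)
            for (std::size_t c = 0; c < channels; ++c)
                chw[(c * height + h) * width + w] = hwc[(h * width + w) * channels + c];
    return chw;
}

// Planar CHW -> interleaved HWC, the inverse of the function above.
std::vector<float> chwToHwc(const std::vector<float> &chw, std::size_t height,
                            std::size_t width, std::size_t channels)
{
    assert(chw.size() == height * width * channels);
    std::vector<float> hwc(chw.size());
    for (std::size_t h = 0; h < height; ++h)
        for (std::size_t w = 0; w < width; ++w)
            for (std::size_t c = 0; c < channels; ++c)
                hwc[(h * width + w) * channels + c] = chw[(c * height + h) * width + w];
    return hwc;
}
```

For a 1x2 image with three channels, the HWC buffer {1, 2, 3, 4, 5, 6} (two RGB pixels) becomes {1, 4, 2, 5, 3, 6} in CHW: all red values first, then green, then blue.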

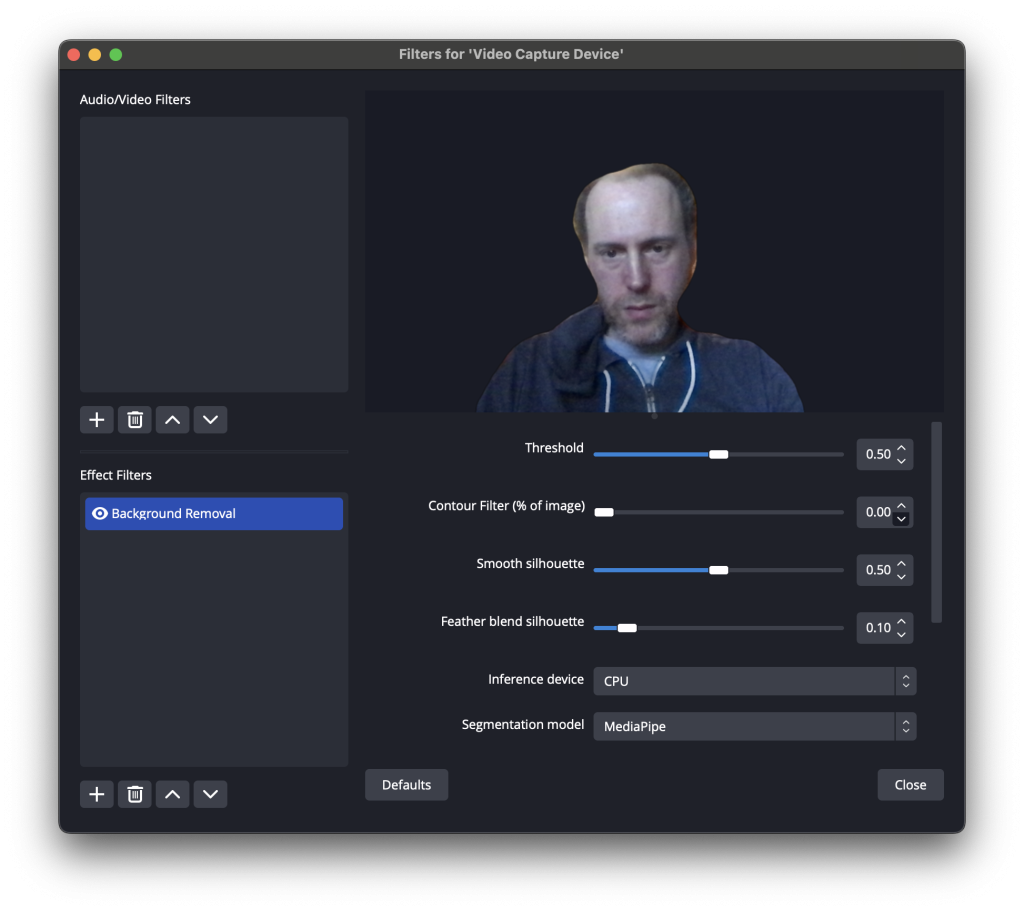

Plugin Properties and Customization

The plugin offers a range of properties and customization options to enhance the user’s control over the background removal process. These properties include threshold adjustments, preprocessing / postprocessing operations (e.g., smoothing, feathering), GPU options, model selection, and more. By leveraging the plugin’s properties, users can fine-tune the background removal based on their specific streaming or recording needs.

Properties defined in the plugin's static obs_properties_t *filter_properties(void *data) function create the corresponding UI elements in the filter's settings dialog.

All of the filter's functions have access to a data pointer that holds the state needed for processing the video:

    struct background_removal_filter : public filter_data {
        float threshold = 0.5f;
        cv::Scalar backgroundColor{0, 0, 0, 0};
        float contourFilter = 0.05f;
        float smoothContour = 0.5f;
        float feather = 0.0f;

        cv::Mat backgroundMask;
        int maskEveryXFrames = 1;
        int maskEveryXFramesCount = 0;
        int64_t blurBackground = 0;

        gs_effect_t *effect;
        gs_effect_t *kawaseBlurEffect;
    };

For example, this is part of the function that reads the UI properties and stores them in the data struct:

    static void filter_update(void *data, obs_data_t *settings)
    {
        struct background_removal_filter *tf = reinterpret_cast<background_removal_filter *>(data);

        tf->threshold = (float)obs_data_get_double(settings, "threshold");
        tf->contourFilter = (float)obs_data_get_double(settings, "contour_filter");
        tf->smoothContour = (float)obs_data_get_double(settings, "smooth_contour");
        tf->feather = (float)obs_data_get_double(settings, "feather");
        tf->maskEveryXFrames = (int)obs_data_get_int(settings, "mask_every_x_frames");
        tf->maskEveryXFramesCount = (int)(0);
        tf->blurBackground = obs_data_get_int(settings, "blur_background");
        //...
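The maskEveryXFrames and maskEveryXFramesCount fields suggest that the mask is recomputed only every few frames to save compute, with the previous mask reused in between. The walkthrough does not show that logic, so the following is only a guess at how such a counter could work; the `MaskScheduler` struct and `shouldRunInference` method are hypothetical names.

```cpp
#include <cassert>

// Hypothetical helper mirroring the two counter fields from the filter struct.
struct MaskScheduler {
    int maskEveryXFrames = 1;      // run inference once every X frames
    int maskEveryXFramesCount = 0; // frames seen since the last inference

    // Returns true when inference should run on the current frame;
    // on all other frames the caller would reuse the cached mask.
    bool shouldRunInference()
    {
        assert(maskEveryXFrames >= 1);
        const bool run = (maskEveryXFramesCount == 0);
        maskEveryXFramesCount = (maskEveryXFramesCount + 1) % maskEveryXFrames;
        return run;
    }
};
```

Resetting the counter to zero when settings change, as filter_update does, would make the first frame after an update always trigger a fresh mask under this scheme.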

Conclusion

The OBS Background Removal Plugin has emerged as a valuable tool for content creators seeking virtual green screen capabilities and seamless background removal in OBS. Through a well-structured architecture, efficient GPU utilization, and integration with neural network models, the plugin delivers high-quality results in real-time. By exploring the code walkthrough provided above, developers can gain insights into building similar plugins and harness the power of OBS’s plugin system.

As the plugin continues to evolve and improve, its widespread adoption within the streaming community highlights the value it brings to content creators. If you’re interested in delving deeper into OBS plugin development or learning more about the OBS Background Removal Plugin, refer to the official OBS plugin documentation for comprehensive resources and guidance.